The Cache Formula

The formula

Motivation

Learning about how caches work can be a very disorienting process to say the least. Worse even, learning theory may not exactly be correlated with being able to actually determine if a cache access is a hit or a miss, as one can be caught up in the hardware. Here I present a formula which abstracts most of this thinking away in favour of a very mechanical process.

Cache Basics

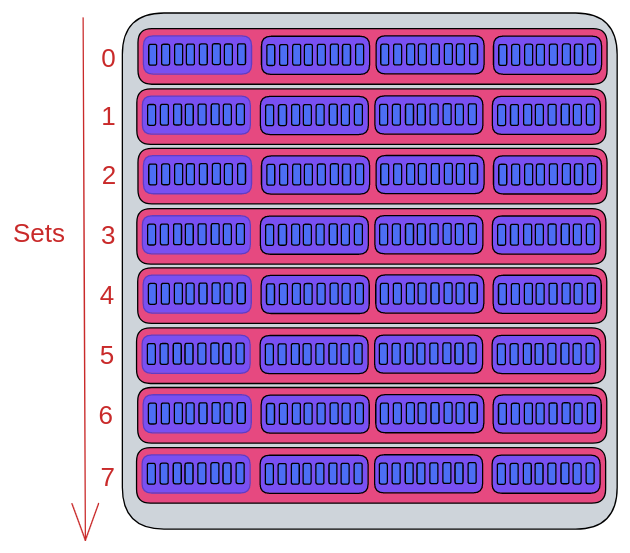

I will not explain the entire theory behind caches, but I find it useful to find understand where the formula comes from. Given a cache structure as follows: A cache consists of

Remark: It is almost always the case that all these quantities are a power of two for hardware related reasons, as such it is often ideal to write them as powers of two

In this example we have

Now how do we address bytes in this cache? Notice first of all that caches are designed as for cache blocks in the same cache set are meant to be shuffled around (according to eviction principles like FIFO or LRU), so we cannot address which block inside of a set something goes into. But we can address which set and what position inside of a block.

Namely we do this in binary:

Assume we want the

For example

Notice that this is completely reversible, for example we can find that

Now we are missing the final piece of the puzzle: Given an address of a cache access how do we find its cache address? It’s easy: it’s the last few bits.

In specific notice that cache addresses always have length

Deriving the formula

Let

In words,

What about Associativity?

One may notice that Associativity does not actually occur in this formula. This may lead to the conclusion that associativity never has to be considered when asking if two addresses map to the same set. This is correct with a big asterik.

Namely, we almost never have the numbers